Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications.

Helm chart

N3uron is available as a Helm chart to streamline deployments in Kubernetes:

Step 1: Add the N3uron Helm repository:

    helm repo add n3uron https://dl.n3uron.com/helm-charts

Step 2: Deploy N3uron:

The N3uron Helm chart supports two types of deployment: standalone, for deploying a single N3uron instance, and redundant, for deploying two N3uron instances with high availability and fault tolerance.
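The chart settings can also be kept in a values file instead of being passed on the command line. The following is a minimal sketch, assuming the chart accepts the deploymentMode and adminPassword keys used with --set in this section; the file name n3uron-values.yaml, the placeholder password, and the install_n3uron helper are illustrative and not part of the chart.

```shell
#!/usr/bin/env bash
# Sketch: keep the chart settings in a values file (file name is an assumption).
cat > n3uron-values.yaml <<'EOF'
# Same keys as the --set flags used in this guide
deploymentMode: standalone
adminPassword: change-me
EOF

# Hypothetical helper: install the chart with the values file.
# Needs Helm and the n3uron repository added in Step 1.
install_n3uron() {
  helm install n3uron n3uron/n3uron -f n3uron-values.yaml
}
```

Calling install_n3uron then deploys the release with those settings, and the password no longer has to be typed as a command-line argument.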

The following command deploys N3uron in standalone mode.

    helm install n3uron n3uron/n3uron --set deploymentMode=standalone,adminPassword=n3uron

    NAME: n3uron
    LAST DEPLOYED: Thu Dec 5 16:32:42 2024
    NAMESPACE: default
    STATUS: deployed
    REVISION: 1

Step 3: Access the N3uron WebUI:

    kubectl get all

    NAME           READY   STATUS    RESTARTS   AGE
    pod/n3uron-0   1/1     Running   0          16s

    NAME                   TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
    service/kubernetes     ClusterIP   10.96.0.1        <none>        443/TCP    121d
    service/n3uron-webui   ClusterIP   10.104.106.192   <none>        8003/TCP   17s

    NAME                      READY   AGE
    statefulset.apps/n3uron   1/1     17s

Port forwarding gives access to port 8003 of the N3uron container from port 8003 on the local machine. After running the following command, navigate to http://localhost:8003

    kubectl port-forward pod/n3uron-0 8003:8003

Standalone deployment

Deploy a single N3uron instance to Kubernetes.

Step 1: Create a secret to store the credentials to access the N3uron WebUI.

    # n3uron-credentials.yaml
    apiVersion: v1
    kind: Secret
    metadata:
      name: n3uron-credentials
    type: Opaque
    stringData:
      admin-password: <secure-password>

Apply the manifest:

    kubectl apply -f n3uron-credentials.yaml

Step 2: Create a StatefulSet to deploy N3uron with persistent storage.

    # n3uron-statefulset.yaml
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: n3uron
    spec:
      serviceName: n3uron
      selector:
        matchLabels:
          app: n3uron
      template:
        metadata:
          labels:
            app: n3uron
        spec:
          containers:
            - name: n3uron
              image: n3uronhub/n3uron:v1.22
              env:
                - name: HOSTNAME
                  value: n3uron-k8s-01 # Set the hostname for licensing
                - name: ADMIN_PASSWORD
                  valueFrom:
                    secretKeyRef:
                      name: n3uron-credentials
                      key: admin-password
              volumeMounts:
                - name: n3-config
                  mountPath: /opt/n3uron/config
                  subPath: config
                - name: n3-data
                  mountPath: /opt/n3uron/data
                - name: n3-log
                  mountPath: /opt/n3uron/log
                - name: n3-licenses
                  mountPath: /opt/n3uron/licenses
              securityContext:
                capabilities:
                  # The CAP_SYS_ADMIN capability is required to enforce a static hostname
                  # inside the container, otherwise licensing may not work.
                  add: ["CAP_SYS_ADMIN"]
              ports:
                - containerPort: 8003
      volumeClaimTemplates:
        - metadata:
            name: n3-config
          spec:
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 1Gi
        - metadata:
            name: n3-data
          spec:
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 10Gi
        - metadata:
            name: n3-log
          spec:
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 10Gi
        - metadata:
            name: n3-licenses
          spec:
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 1Gi

Apply the manifest:

    kubectl apply -f n3uron-statefulset.yaml

Step 3: Verify the deployment has been successful:

    kubectl get all

There should be a running pod named n3uron-0:

    NAME           READY   STATUS    RESTARTS   AGE
    pod/n3uron-0   1/1     Running   0          75s

    NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    service/kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   79m

    NAME                      READY   AGE
    statefulset.apps/n3uron   1/1     75s

Step 4: Access the N3uron WebUI using port forwarding. The following command gives access to port 8003 of the N3uron container from port 8003 on the local machine. After running it, navigate to http://localhost:8003

    kubectl port-forward pod/n3uron-0 8003:8003

Standalone deployment with Historian

Deploy a single N3uron instance with a MongoDB database for Historian.

Step 1: Create a secret to store the credentials to access the N3uron WebUI.

    # n3uron-credentials.yaml
    apiVersion: v1
    kind: Secret
    metadata:
      name: n3uron-credentials
    type: Opaque
    stringData:
      admin-password: <secure-password>

Apply the manifest:

    kubectl apply -f n3uron-credentials.yaml

Step 2: Create a StatefulSet to deploy N3uron with persistent storage.

    # n3uron-statefulset.yaml
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: n3uron
    spec:
      serviceName: n3uron
      selector:
        matchLabels:
          app: n3uron
      template:
        metadata:
          labels:
            app: n3uron
        spec:
          containers:
            - name: n3uron
              image: n3uronhub/n3uron:v1.22
              env:
                - name: HOSTNAME
                  value: n3uron-k8s-01 # Set the hostname for licensing
                - name: ADMIN_PASSWORD
                  valueFrom:
                    secretKeyRef:
                      name: n3uron-credentials
                      key: admin-password
              volumeMounts:
                - name: n3-config
                  mountPath: /opt/n3uron/config
                  subPath: config
                - name: n3-data
                  mountPath: /opt/n3uron/data
                - name: n3-log
                  mountPath: /opt/n3uron/log
                - name: n3-licenses
                  mountPath: /opt/n3uron/licenses
              securityContext:
                capabilities:
                  # The CAP_SYS_ADMIN capability is required to enforce a static hostname
                  # inside the container, otherwise licensing may not work.
                  add: ["CAP_SYS_ADMIN"]
              ports:
                - containerPort: 8003
      volumeClaimTemplates:
        - metadata:
            name: n3-config
          spec:
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 1Gi
        - metadata:
            name: n3-data
          spec:
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 10Gi
        - metadata:
            name: n3-log
          spec:
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 10Gi
        - metadata:
            name: n3-licenses
          spec:
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 1Gi

Apply the manifest:

    kubectl apply -f n3uron-statefulset.yaml

Step 3: Create a StatefulSet to deploy the MongoDB database required by Historian:

    # mongo-statefulset.yaml
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: mongo
    spec:
      selector:
        matchLabels:
          app: mongo
      serviceName: mongo
      template:
        metadata:
          labels:
            app: mongo
        spec:
          containers:
            - name: mongo
              image: mongo:8.2
              ports:
                - containerPort: 27017
              volumeMounts:
                - name: data
                  mountPath: /data/db
      volumeClaimTemplates:
        - metadata:
            name: data
          spec:
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 10Gi

Apply the manifest:

    kubectl apply -f mongo-statefulset.yaml

Step 4: Create a Service to allow access to MongoDB from N3uron:

    # mongo-svc.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: mongo
    spec:
      clusterIP: None
      selector:
        app: mongo
      ports:
        - port: 27017
          targetPort: 27017

Apply the manifest:

    kubectl apply -f mongo-svc.yaml

Step 5: Verify that all resources have been created.

    kubectl get all

The output should list the pod mongo-0 and the service mongo:

    NAME           READY   STATUS    RESTARTS       AGE
    pod/mongo-0    1/1     Running   1 (8m4s ago)   51m
    pod/n3uron-0   1/1     Running   2 (8m ago)     20h

    NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)     AGE
    service/kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP     21h
    service/mongo        ClusterIP   None         <none>        27017/TCP   51m

    NAME                      READY   AGE
    statefulset.apps/mongo    1/1     51m
    statefulset.apps/n3uron   1/1     20h

Step 6: Access the N3uron WebUI using port forwarding. The following command gives access to port 8003 of the N3uron container from port 8003 on the local machine. After running it, navigate to http://localhost:8003

    kubectl port-forward pod/n3uron-0 8003:8003

Historian configuration

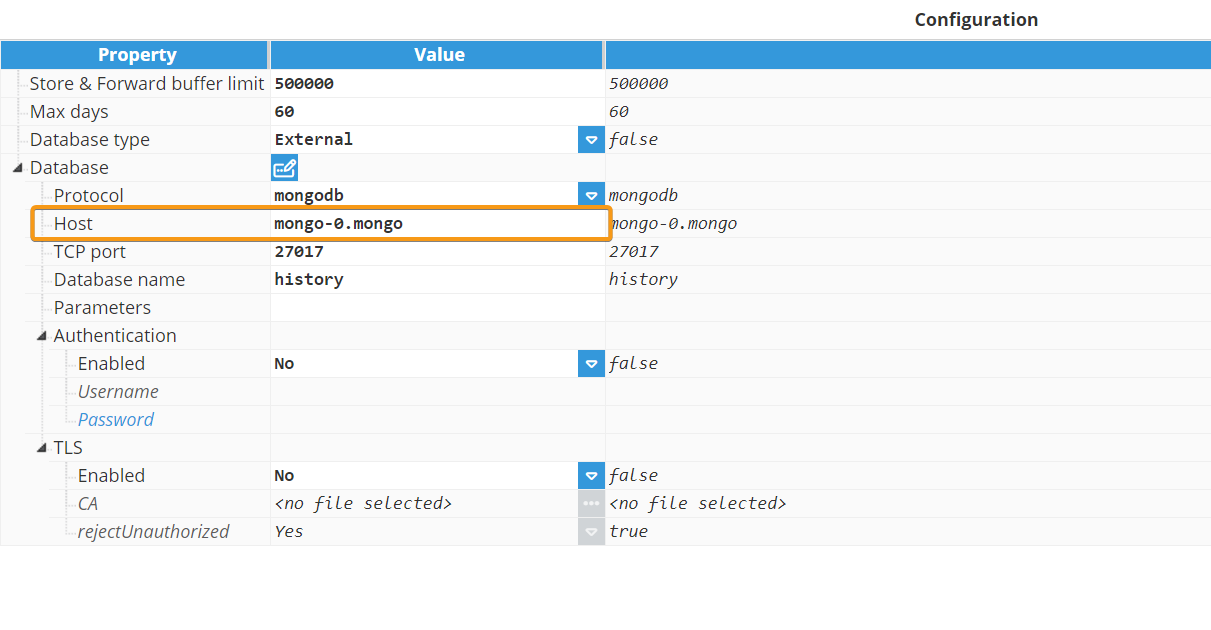

To access the MongoDB database from the N3uron Historian module, select External as the Database type and set the Host field to mongo-0.mongo. This address is built from the Pod name (mongo-0) and the Service name (mongo).
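The Host value follows the Kubernetes DNS naming for StatefulSet Pods behind a headless Service. A small sketch of how that name is assembled; the pod_dns_name helper is hypothetical, and the cluster.local suffix assumes the default cluster domain:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the DNS name of a StatefulSet Pod
# that sits behind a headless Service.
pod_dns_name() {
  local pod="$1" service="$2" namespace="${3:-}"
  if [ -n "$namespace" ]; then
    # Fully qualified form (assumes the default cluster domain)
    echo "${pod}.${service}.${namespace}.svc.cluster.local"
  else
    # Short form, resolved relative to the caller's namespace
    echo "${pod}.${service}"
  fi
}

pod_dns_name mongo-0 mongo            # prints mongo-0.mongo
pod_dns_name mongo-0 mongo default    # prints mongo-0.mongo.default.svc.cluster.local
```

The short form resolves from Pods in the same namespace as the Service, which is the case for N3uron and MongoDB in this deployment.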

Redundant deployment with Historian (Replica Set)

Deploy two redundant N3uron instances with a MongoDB replica set for high availability.

Step 1: Create a new namespace for N3uron.

    kubectl create namespace n3uron-test

Step 2: Install the MongoDB Kubernetes Operator using Helm.

    helm repo add mongodb https://mongodb.github.io/helm-charts
    helm install community-operator mongodb/community-operator --namespace n3uron-test

Step 3: Create a secret to securely store the credentials to access the N3uron WebUI. Put the following content in a file named n3uron-credentials.yaml, then set the desired passwords.

    apiVersion: v1
    kind: Secret
    metadata:
      name: n3uron-credentials
      namespace: n3uron-test
    type: Opaque
    stringData:
      admin-password: <secure-password>
      mongo-password: <another-secure-password>

Step 4: Apply the manifest to create the resource.

    kubectl apply -f n3uron-credentials.yaml

Step 5: Create a file named n3uron-statefulset.yaml with the following content:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: n3uron
      namespace: n3uron-test
    spec:
      serviceName: "n3uron"
      replicas: 2
      selector:
        matchLabels:
          app: n3uron
      template:
        metadata:
          labels:
            app: n3uron
        spec:
          containers:
            - name: n3uron
              image: n3uronhub/n3uron:v1.22
              env:
                - name: HOSTNAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name # Use the pod's name as the hostname for licensing
                - name: ADMIN_PASSWORD
                  valueFrom:
                    secretKeyRef:
                      name: n3uron-credentials
                      key: admin-password
              volumeMounts:
                - name: n3-config
                  mountPath: /opt/n3uron/config
                  subPath: config
                - name: n3-data
                  mountPath: /opt/n3uron/data
                - name: n3-log
                  mountPath: /opt/n3uron/log
                - name: n3-licenses
                  mountPath: /opt/n3uron/licenses
              securityContext:
                capabilities:
                  # The CAP_SYS_ADMIN capability is required to enforce a static hostname
                  # inside the container, otherwise licensing may not work.
                  add: ["CAP_SYS_ADMIN"]
              ports:
                - name: webui
                  containerPort: 8003
                - name: redundancy
                  containerPort: 3002
      volumeClaimTemplates:
        - metadata:
            name: n3-config
          spec:
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 1Gi
        - metadata:
            name: n3-data
          spec:
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 10Gi
        - metadata:
            name: n3-log
          spec:
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 5Gi
        - metadata:
            name: n3-licenses
          spec:
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 1Gi

Step 6: Apply the manifest to deploy N3uron:

    kubectl apply -f n3uron-statefulset.yaml

Step 7: Verify the deployment has been successful:

    kubectl get all -n n3uron-test

The MongoDB operator is deployed and two N3uron pods are running, n3uron-0 and n3uron-1:

    NAME                                               READY   STATUS    RESTARTS   AGE
    pod/mongodb-kubernetes-operator-558d9545b8-hn5kf   1/1     Running   0          2m29s
    pod/n3uron-0                                       1/1     Running   0          10s
    pod/n3uron-1                                       1/1     Running   0          8s

    NAME                                          READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/mongodb-kubernetes-operator   1/1     1            1           2m29s

    NAME                                                     DESIRED   CURRENT   READY   AGE
    replicaset.apps/mongodb-kubernetes-operator-558d9545b8   1         1         1       2m29s

    NAME                      READY   AGE
    statefulset.apps/n3uron   2/2     10s

Step 8: Create a Service to allow the N3uron-to-N3uron communication required by the redundancy mode. Put the following definition in a file named n3uron-svc.yaml.

    apiVersion: v1
    kind: Service
    metadata:
      name: n3uron
      namespace: n3uron-test
    spec:
      clusterIP: None
      selector:
        app: n3uron
      ports:
        - port: 3002
          targetPort: redundancy

Apply the manifest:

    kubectl apply -f n3uron-svc.yaml

Step 9: Create a custom MongoDBCommunity resource to deploy a MongoDB replica-set cluster. Save the content into mongodb-cluster.yaml.

    apiVersion: mongodbcommunity.mongodb.com/v1
    kind: MongoDBCommunity
    metadata:
      name: historian-db
      namespace: n3uron-test
    spec:
      members: 3
      type: ReplicaSet
      version: "8.2"
      security:
        authentication:
          modes: ["SCRAM"]
      users:
        - name: n3uron
          db: history
          passwordSecretRef:
            name: n3uron-credentials
            key: mongo-password # Must match the key defined in n3uron-credentials.yaml
          roles:
            - name: userAdminAnyDatabase
              db: history
            - name: readWrite
              db: history
          scramCredentialsSecretName: n3uron

Step 10: Apply the manifest to deploy the MongoDB replica-set cluster. The deployment can take a few minutes to complete:

    kubectl apply -f mongodb-cluster.yaml

Step 11: Verify the deployment has been successful:

    kubectl get all -n n3uron-test

There are now three MongoDB pods configured to run as a replica set: historian-db-0, historian-db-1 and historian-db-2.

    NAME                                               READY   STATUS    RESTARTS   AGE
    pod/historian-db-0                                 2/2     Running   0          2m28s
    pod/historian-db-1                                 2/2     Running   0          96s
    pod/historian-db-2                                 2/2     Running   0          64s
    pod/mongodb-kubernetes-operator-558d9545b8-hn5kf   1/1     Running   1          28m
    pod/n3uron-0                                       1/1     Running   0          17m
    pod/n3uron-1                                       1/1     Running   0          17m

    NAME                       TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)     AGE
    service/historian-db-svc   ClusterIP   None         <none>        27017/TCP   6m47s
    service/n3uron             ClusterIP   None         <none>        3002/TCP    19m

    NAME                                          READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/mongodb-kubernetes-operator   1/1     1            1           28m

    NAME                                                     DESIRED   CURRENT   READY   AGE
    replicaset.apps/mongodb-kubernetes-operator-558d9545b8   1         1         1       28m

    NAME                                READY   AGE
    statefulset.apps/historian-db       3/3     2m28s
    statefulset.apps/historian-db-arb   0/0     2m28s
    statefulset.apps/n3uron             2/2     17m

Step 12: Retrieve the connection string to access the MongoDB cluster. This data is stored in a Kubernetes secret created by the MongoDB operator with the name <metadata.name>-<auth-db>-<username>, which in our case is historian-db-history-n3uron.

    kubectl get secret historian-db-history-n3uron -n n3uron-test -o json

    {
      "apiVersion": "v1",
      "data": {
        "connectionString.standard": "bW9uZ29kYjovL24zdXJvbjptdXlzZWNyZXRvQGhpc3Rvcmlhbi1kYi0wLmhpc3Rvcmlhbi1kYi1zdmMubjN1cm9uLXRlc3Quc3ZjLmNsdXN0ZXIubG9jYWw6MjcwMTcsaGlzdG9yaWFuLWRiLTEuaGlzdG9yaWFuLWRiLXN2Yy5uM3Vyb24tdGVzdC5zdmMuY2x1c3Rlci5sb2NhbDoyNzAxNyxoaXN0b3JpYW4tZGItMi5oaXN0b3JpYW4tZGItc3ZjLm4zdXJvbi10ZXN0LnN2Yy5jbHVzdGVyLmxvY2FsOjI3MDE3L2hpc3Rvcnk/cmVwbGljYVNldD1oaXN0b3JpYW4tZGImc3NsPWZhbHNl",
        "connectionString.standardSrv": "bW9uZ29kYitzcnY6Ly9uM3Vyb246bXV5c2VjcmV0b0BoaXN0b3JpYW4tZGItc3ZjLm4zdXJvbi10ZXN0LnN2Yy5jbHVzdGVyLmxvY2FsL2hpc3Rvcnk/cmVwbGljYVNldD1oaXN0b3JpYW4tZGImc3NsPWZhbHNl",
        ...
      },
      "kind": "Secret",
      ...
    }

After decoding the base64 content, we get the connection strings in plain text:

    {
      "apiVersion": "v1",
      "data": {
        "connectionString.standard": "mongodb://n3uron:secure-passwd@historian-db-0.historian-db-svc.n3uron-test.svc.cluster.local:27017,historian-db-1.historian-db-svc.n3uron-test.svc.cluster.local:27017,historian-db-2.historian-db-svc.n3uron-test.svc.cluster.local:27017/history?replicaSet=historian-db&ssl=false",
        "connectionString.standardSrv": "mongodb+srv://n3uron:secure-passwd@historian-db-svc.n3uron-test.svc.cluster.local/history?replicaSet=historian-db&ssl=false",
        ...
      },
      "kind": "Secret",
      ...
    }

Step 13: Access and configure each N3uron node with redundancy, see Redundancy.

To access the primary node WebUI:

    kubectl port-forward pod/n3uron-0 8003:8003 -n n3uron-test

To access the backup node WebUI:

    kubectl port-forward pod/n3uron-1 8003:8003 -n n3uron-test

Use the n3uron-0.n3uron address as Host in the backup node configuration. If the connection is established successfully, you’ll see the text (Standby) in the System section.
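Both port-forward commands above bind local port 8003, so they cannot run at the same time as written. A sketch that gives each node its own local port; the local_port_for and forward_all helpers and the choice of local port 8004 for the backup node are assumptions made for illustration:

```shell
#!/usr/bin/env bash
NAMESPACE="n3uron-test"

# Map a pod ordinal to a local port: n3uron-0 -> 8003, n3uron-1 -> 8004.
local_port_for() {
  echo $((8003 + $1))
}

# Forward the WebUI port (8003) of both pods in the background,
# then block until the forwards exit.
forward_all() {
  for ordinal in 0 1; do
    kubectl port-forward "pod/n3uron-${ordinal}" \
      "$(local_port_for "$ordinal"):8003" -n "$NAMESPACE" &
  done
  wait
}
```

After calling forward_all, the primary node WebUI would be reachable at http://localhost:8003 and the backup node WebUI at http://localhost:8004.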

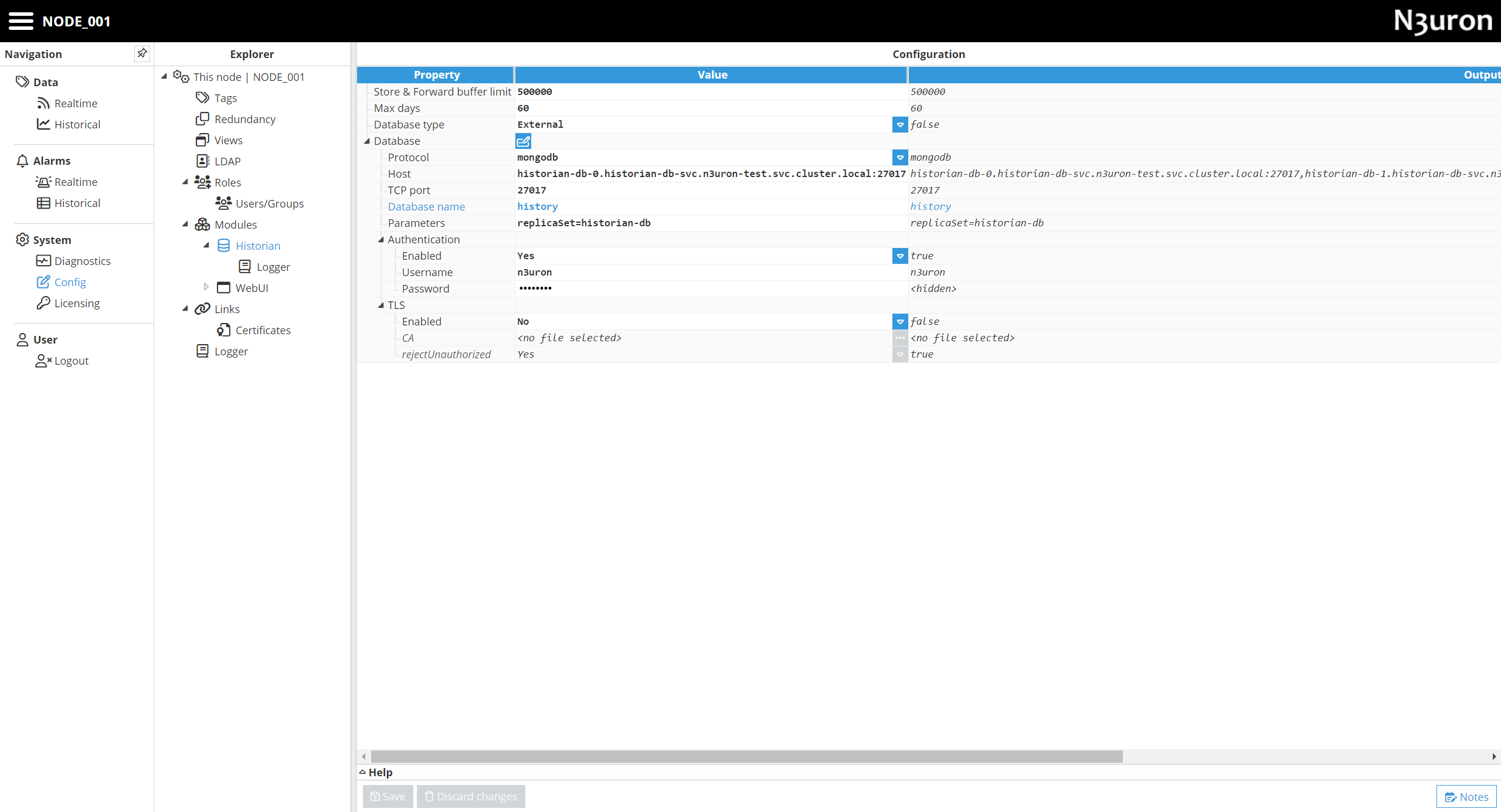

Step 14: Configure the Historian module to use the MongoDB replica set as its database. In this example we’ll use the Standard connection string.
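The connection string from Step 12 is stored base64-encoded in the Secret. A minimal sketch of decoding one field on the command line; decode_field is a hypothetical helper, the sample value is made up for illustration, and the commented kubectl line assumes access to the cluster from this guide:

```shell
#!/usr/bin/env bash
# Hypothetical helper: decode one base64-encoded Secret field to plain text.
decode_field() {
  printf '%s' "$1" | base64 -d
}

# With cluster access, the encoded value could be read with kubectl's jsonpath
# output (the dot inside the key name has to be escaped):
#   encoded=$(kubectl get secret historian-db-history-n3uron -n n3uron-test \
#     -o jsonpath='{.data.connectionString\.standard}')

# Offline illustration with a made-up value:
encoded="bW9uZ29kYjovL2V4YW1wbGU="
decode_field "$encoded"   # prints mongodb://example
```

The decoded value is what goes into the Historian connection settings. Since it contains the database password, it is best not to write it to logs or shared files.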

This is the resulting configuration for the Historian module:

At this point you’ll have a fault-tolerant and redundant N3uron deployment with a Historian database backed by multiple MongoDB replicas.